AI-Powered Data Orchestration: How Intelligent Pipelines Are Redefining Data Engineering in 2026

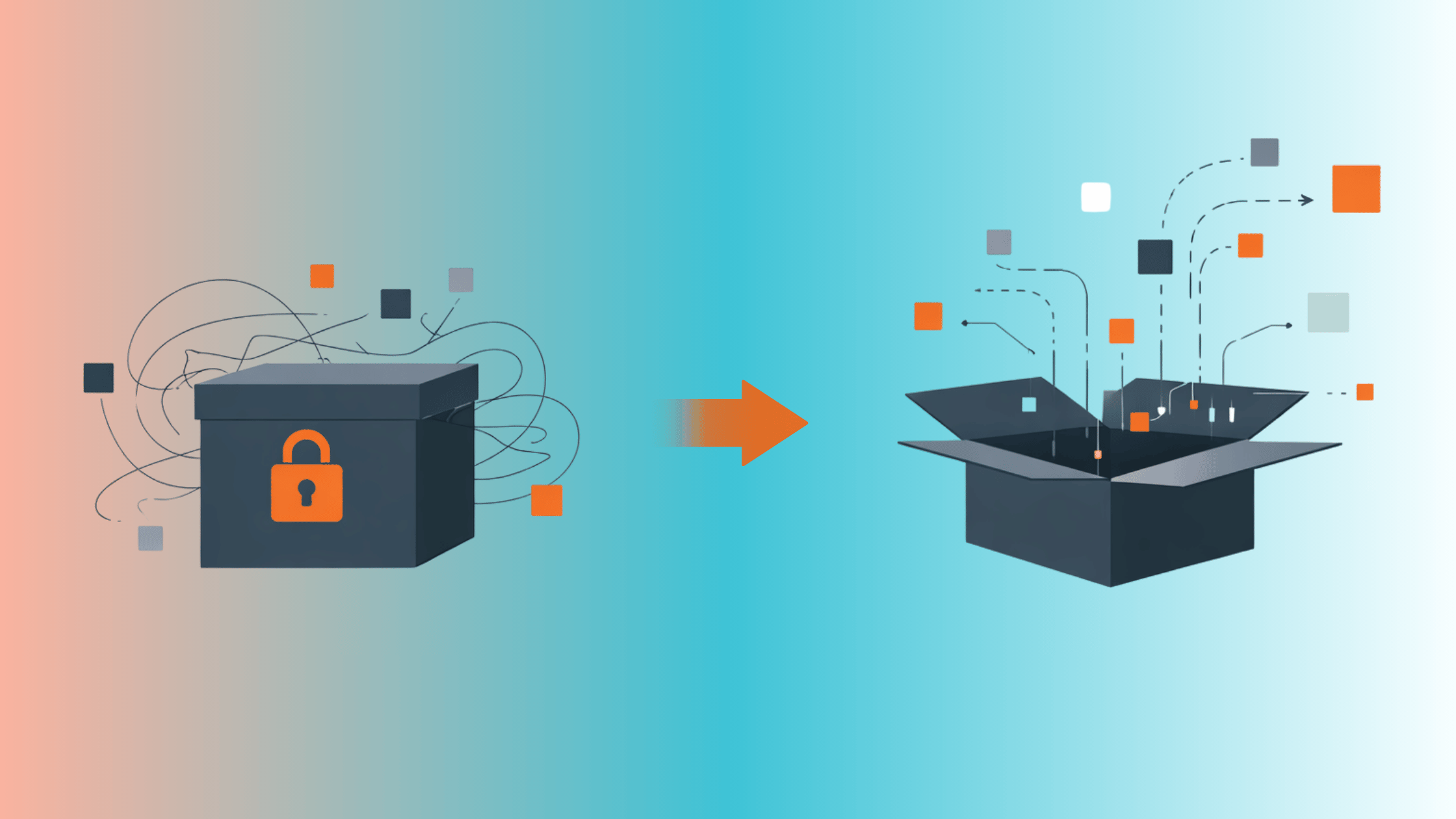

As data ecosystems grow more complex, traditional orchestration can no longer keep up. AI-powered data orchestration is reshaping how modern enterprises build, monitor, and optimize data pipelines—shifting from reactive workflows to intelligent, self-healing systems. This blog explores what’s changing, why it matters, and how data leaders can prepare for the next era of data engineering.


The data landscape in 2025 stands at a pivotal point. With the global AI orchestration market projected to grow from $11 billion in 2025 to over $30 billion by 2030, enterprises are entering a new era of intelligent automation.

This transformation goes far beyond traditional ETL or workflow tools—it’s about AI-driven, self-healing, and context-aware data systems that reimagine how information moves, learns, and adapts within an organization.

Welcome to the new age of AI-powered data orchestration, where data pipelines think, predict, and optimize themselves.

From Data Engineering to Data Intelligence

The once-clear boundary between data engineering and AI engineering has all but disappeared.

Today’s systems don’t just move data—they interpret, contextualize, and optimize it in real time.

Traditional orchestration platforms were designed for predictable workflows: extract, transform, load, repeat.

But 2025 marks the rise of AI orchestration, where pipelines are dynamic, adaptive, and capable of learning from operational context.

In short:

AI orchestration coordinates not just data flows, but the intelligence behind them—linking models, metadata, and observability into one unified ecosystem.

The $3.1 Trillion Problem Behind the Revolution

Data quality remains one of the most expensive unsolved challenges in enterprise AI.

By one widely cited estimate, poor-quality data costs the U.S. economy $3.1 trillion annually, and it can drain up to 30% of enterprise revenue through inefficiencies and decision errors.

Static validation scripts and manual checks can’t keep up with modern data velocity.

The solution? AI-powered data quality orchestration—where intelligent agents continuously monitor, repair, and optimize pipelines.

Five Pillars of Modern AI-Orchestrated Data Systems

1. Context Engineering: The Hidden Multiplier

In 2025, the most successful AI pipelines are powered by context, not just data.

Context engineering—the art of preparing, optimizing, and maintaining context data—has become a defining skill for AI and data teams.

Why it matters:

- Cost Efficiency: Poor metadata and incomplete embeddings inflate compute costs by up to 400x during model runs.

- Reliability: Misaligned context causes unpredictable LLM responses and retrieval errors.

- Performance: Teams that monitor context upstream, before model ingestion, see substantial improvements in both accuracy and cost.

Tools like LangChain, LlamaIndex, and GraphRAG frameworks are reshaping how organizations manage and inject context into models.
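To make "monitoring upstream context" concrete, here is a minimal sketch of a pre-ingestion audit. It is not tied to LangChain, LlamaIndex, or any GraphRAG framework; the `ContextChunk` type, the `audit_context` function, and the required metadata keys are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ContextChunk:
    """One retrievable unit of context; the field names are illustrative."""
    chunk_id: str
    text: str
    metadata: dict = field(default_factory=dict)


# Assumption: these are the metadata keys a team has decided every chunk needs.
REQUIRED_METADATA = ("source", "updated_at")


def audit_context(chunks: list[ContextChunk]) -> dict[str, list[str]]:
    """Group chunk ids by the problem found, before anything reaches a model."""
    problems: dict[str, list[str]] = {
        "empty": [],
        "missing_metadata": [],
        "duplicate": [],
    }
    seen_texts: set[str] = set()
    for chunk in chunks:
        # Collapse whitespace and case so trivially different copies match.
        normalized = " ".join(chunk.text.split()).lower()
        if not normalized:
            problems["empty"].append(chunk.chunk_id)
            continue
        if any(key not in chunk.metadata for key in REQUIRED_METADATA):
            problems["missing_metadata"].append(chunk.chunk_id)
        if normalized in seen_texts:
            problems["duplicate"].append(chunk.chunk_id)
        seen_texts.add(normalized)
    return problems
```

Running a check like this before embedding or retrieval keeps empty, duplicated, or untraceable chunks from consuming tokens downstream. A production version would likely add freshness and embedding-coverage checks.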

2. Autonomous Orchestration with AI Agents

Orchestration has evolved from static task scheduling to autonomous, self-optimizing workflows.

AI agents now coordinate tasks, make decisions, and adapt to changing data conditions—all in real time.

Core capabilities include:

- Predictive Failure Prevention: Tools like Dagster, Prefect, and Flyte use AI-driven metadata and lineage analysis to predict job failures before they occur.

- Natural Language Pipeline Creation: With frameworks like LangChain and Microsoft AutoGen, engineers can now design or update data pipelines using conversational interfaces.

- Self-Healing Systems: AI automatically reruns failed tasks, adjusts schedules, or switches compute resources, eliminating the need for manual intervention.

This agentic approach turns orchestration into a living system, continuously optimizing itself to balance performance, cost, and reliability.
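The self-healing behavior described above (rerun a failed task, then switch compute resources) can be sketched in plain Python. This is a simplified, rule-based illustration rather than how any particular orchestrator implements it; `run_with_healing` and the compute target names are hypothetical.

```python
import time
from typing import Callable, Optional, Sequence, TypeVar

T = TypeVar("T")


def run_with_healing(
    task: Callable[[str], T],
    compute_targets: Sequence[str],
    retries_per_target: int = 2,
    base_delay_s: float = 1.0,
) -> T:
    """Try the task on each compute target in order, retrying with backoff.

    The task receives the name of the compute target it should run on.
    """
    last_error: Optional[Exception] = None
    for target in compute_targets:
        for attempt in range(retries_per_target):
            try:
                return task(target)
            except Exception as exc:  # a real system would catch narrower errors
                last_error = exc
                # Exponential backoff: base, 2x base, 4x base, ...
                time.sleep(base_delay_s * (2 ** attempt))
    raise RuntimeError("all compute targets exhausted") from last_error
```

For example, `run_with_healing(load_orders, ["spot-pool", "on-demand-pool"])` would retry a hypothetical `load_orders` task twice on the cheaper pool before falling back to the second one. An AI-driven system would replace the fixed ordering and retry counts with learned policies.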

3. Real-Time Data Quality Automation

AI is redefining data quality management from static rule-based systems to adaptive, learning-based frameworks.

Key advancements:

- ML-Driven Validation: Machine learning models detect anomalies in data shape, schema drift, or missing values, with no predefined rules.

- Intelligent Prioritization: Systems learn which data sources matter most, reducing alert fatigue and focusing human review where it matters.

- Automated Root Cause Analysis: When a metric drifts or data breaks, AI traces the lineage and proposes fixes.

Platforms like Monte Carlo, WhyLabs, and Unravel Data exemplify this shift toward data observability + intelligence—where the system doesn’t just flag issues, it helps solve them.
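As a rough illustration of validation that learns a baseline instead of relying on hand-written thresholds, the sketch below uses a simple z-score over historical values plus a schema comparison. This is a basic statistical stand-in, not the models used by Monte Carlo, WhyLabs, or Unravel Data; the function names are hypothetical.

```python
from statistics import mean, stdev


def schema_drift(expected: dict[str, str], observed: dict[str, str]) -> dict[str, list[str]]:
    """Compare column-to-type mappings and report what changed."""
    shared = set(expected) & set(observed)
    return {
        "added": sorted(set(observed) - set(expected)),
        "removed": sorted(set(expected) - set(observed)),
        "retyped": sorted(c for c in shared if expected[c] != observed[c]),
    }


def is_anomalous(history: list[float], value: float, threshold: float = 3.0) -> bool:
    """Flag a value that sits more than `threshold` standard deviations
    from the mean of its own history (for example, daily row counts)."""
    if len(history) < 2:
        return False  # not enough history to learn a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold
```

With a history of daily row counts such as `[1000, 1020, 980, 1010, 990]`, a load of 400 rows is flagged while 1005 is not. The same function can be pointed at null rates or freshness lag without writing a new rule for each metric.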

4. Distributed and Cloud-Native Orchestration

Modern enterprises operate across multiple clouds, regions, and compliance zones.

The new frontier is distributed orchestration—managing data flows across hybrid and multi-region setups without losing visibility or control.

Benefits include:

- Performance & Locality: Run pipelines near the data source for faster execution and compliance.

- Unified Policy Control: Infrastructure-as-code templates replicate orchestration policies globally.

- Cost Optimization: AI agents dynamically route workloads to the most efficient region or compute tier.

Cloud-native stacks like Airflow, Dagster, and Prefect Cloud are evolving toward this distributed paradigm, supporting 24x7 global data operations at scale.
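The routing decision behind locality, compliance, and cost optimization can be reduced to a small constrained choice. The sketch below assumes each region exposes a price, a latency to the data source, and a compliance flag; the `Region` type, its fields, and `pick_region` are hypothetical, and a real agent would draw these values from live pricing and telemetry.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    name: str
    cost_per_hour: float   # assumed price for the required compute tier
    latency_ms: float      # assumed latency from the region to the data source
    allowed: bool = True   # False if compliance rules forbid this region


def pick_region(regions: list[Region], max_latency_ms: float) -> Region:
    """Choose the cheapest region that is compliant and within the latency budget."""
    candidates = [
        r for r in regions
        if r.allowed and r.latency_ms <= max_latency_ms
    ]
    if not candidates:
        raise ValueError("no region satisfies the compliance and latency constraints")
    # Break price ties by preferring the lower-latency region.
    return min(candidates, key=lambda r: (r.cost_per_hour, r.latency_ms))
```

Treating compliance and latency as hard filters and cost as the objective keeps the policy easy to audit, which matters when the same template is replicated across regions.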

5. Unified Observability: Data Meets AI

In 2025, data observability and AI observability are merging, providing end-to-end insight from data ingestion to model inference.

Modern observability platforms now offer:

- Real-time pipeline drift detection and anomaly forecasting

- Cross-stack lineage tracking from data to model outputs

- Predictive analytics for proactive maintenance

- Integration with FinOps metrics to balance cost, accuracy, and sustainability

This convergence helps AI models stay trustworthy as they evolve alongside constantly changing datasets.
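Cross-stack lineage tracking is what makes automated root cause analysis possible: once lineage is a graph, finding the origin of a bad model output becomes a graph walk. The following is a minimal sketch assuming lineage is available as a mapping from each asset to its direct upstream assets; `root_causes` and the asset names in the usage note are hypothetical.

```python
def root_causes(
    upstream: dict[str, list[str]],
    unhealthy: set[str],
    start: str,
) -> list[str]:
    """Walk lineage upstream from `start` and return the unhealthy assets
    that have no unhealthy parents, i.e. where the problem first appears."""
    roots: list[str] = []
    seen: set[str] = set()
    stack = [start]
    while stack:
        node = stack.pop()
        if node in seen or node not in unhealthy:
            continue
        seen.add(node)
        bad_parents = [p for p in upstream.get(node, []) if p in unhealthy]
        if bad_parents:
            stack.extend(bad_parents)  # keep climbing toward the source
        else:
            roots.append(node)  # nothing upstream is broken: origin found
    return sorted(roots)
```

Given a lineage of `churn_model` from `features`, which in turn comes from `orders_clean` and `users_clean`, with the whole orders branch marked unhealthy, the walk returns the raw orders table as the single root cause instead of alerting on every downstream asset.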

Technology Stack: Platforms Powering the Shift

- Apache Airflow: Continues to dominate open-source orchestration, now with AI-driven task scheduling and failure prediction.

- Dagster: Leads in structured observability, data testing, and reproducible pipelines.

- Prefect: Combines open-source flexibility with cloud-native deployment for enterprise scalability.

- DataChannel & Domo: Integrate orchestration with compliance checks and workflow automation.

- Microsoft AutoGen & SuperAGI: Drive multi-agent coordination for intelligent, autonomous workflows.

These platforms demonstrate how AI and orchestration are no longer separate layers—they are now one integrated intelligence fabric.

Measuring Impact: ROI of AI-Powered Orchestration

Enterprises adopting AI-driven orchestration report measurable improvements:

- Deployment times reduced by 90%

- Firefighting effort down by 50–99% through automated remediation

- 50% more workloads delivered per budget unit via predictive resource allocation

- 3.5x higher conversion rates for AI-optimized data pipelines

By replacing manual processes with machine intelligence, organizations are achieving both operational excellence and financial efficiency.

Implementation Roadmap: How to Get There

1. Assess and Build the Foundation (0–3 Months)

- Audit data pipelines and identify manual bottlenecks

- Choose orchestration platforms (Airflow, Prefect, Dagster, etc.)

- Establish baseline data quality and lineage metrics

2. Pilot Smart Automation (3–6 Months)

- Target high-failure pipelines for AI-driven orchestration

- Deploy real-time anomaly detection and quality scoring

- Implement self-healing workflows for quick wins
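Targeting high-failure pipelines starts with a ranking built from run history. The sketch below assumes run records are available as `(pipeline_name, succeeded)` pairs exported from whichever orchestrator is in use; `rank_by_failure_rate` and the `min_runs` cutoff are illustrative choices.

```python
from collections import Counter


def rank_by_failure_rate(
    runs: list[tuple[str, bool]],
    min_runs: int = 3,
) -> list[tuple[str, float]]:
    """Return (pipeline, failure_rate) pairs, highest failure rate first.

    Pipelines with fewer than `min_runs` runs are skipped so that a single
    failed run does not put a rarely executed pipeline at the top.
    """
    totals: Counter = Counter()
    failures: Counter = Counter()
    for pipeline, succeeded in runs:
        totals[pipeline] += 1
        if not succeeded:
            failures[pipeline] += 1
    rates = [
        (name, failures[name] / count)
        for name, count in totals.items()
        if count >= min_runs
    ]
    # Sort by descending failure rate, then by name for a stable order.
    return sorted(rates, key=lambda pair: (-pair[1], pair[0]))
```

The top few entries of this ranking are natural pilot candidates, and the same numbers double as a baseline for measuring whether automated remediation actually reduces failures.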

3. Scale and Optimize (6–12 Months)

- Expand to multi-domain and multi-region orchestration

- Implement context engineering for AI/ML workloads

- Establish DataOps + MLOps Centers of Excellence

4. Innovate and Evolve (12+ Months)

- Introduce autonomous AI agents for full lifecycle automation

- Integrate GraphRAG for contextual decision-making

- Deploy predictive maintenance and FinOps-aware orchestration

What’s Next: Beyond 2025

1. Agentic AI for End-to-End Automation

Fully autonomous agents will soon design, refactor, and optimize pipelines in natural language—negotiating with other agents to maintain data reliability across domains.

2. GraphRAG and Knowledge Graph Integration

Graph-based retrieval systems enable richer context correlation, anomaly tracing, and decision reasoning—setting the stage for true AI-native data ecosystems.

3. FinOps and Sustainability

Orchestration will become carbon-aware, dynamically routing workloads based on renewable energy availability and cost-performance tradeoffs.

4. Conversational DataOps

Voice and chat-driven interfaces will democratize data orchestration, allowing business users to monitor or modify pipelines using natural language prompts.

Conclusion: The Data Renaissance

The convergence of AI, data orchestration, and automation marks a turning point for data engineering.

The future isn’t just about faster ETL or smarter models—it’s about self-aware systems that orchestrate themselves.

Organizations that thrive in 2025–26 will:

- Embrace AI-first orchestration

- Invest in context engineering and observability

- Build distributed, cost-optimized architectures

- Encourage human-AI collaboration over replacement

As we enter this new phase, one truth stands out:

The future of data isn’t automated—it’s intelligent.

The question isn’t whether to adopt AI-powered orchestration, but how quickly you can make your data systems think for themselves.
